The Pong GPU: Making a Graphics Card from Discrete Logic

Now that I had laid out the architecture for the MCPU-1, I wanted a way to interface some sort of I/O with it. Basic homebrew CPUs typically use something like a simple 2-line LCD or a few seven-segment displays. Alternatively, there is also the option of connecting serially with a modern PC through an Arduino or something similar. However, I wanted the ability to draw arbitrary images without the constraints of a text-mapped display. My original idea was to use an LED matrix, but this would require a lot of processing overhead from the CPU, as it would have to draw out every pixel to the matrix in code. Instead, I elected to make a complete display driver that could be connected to a modern monitor.

GPUs like the one I set out to build work on a fairly simple principle of operation. Each pixel to be drawn is stored in a section of the GPU’s memory called the framebuffer. This framebuffer should also be accessible to the CPU so that the processor can send the image it wants to draw to the GPU. When it’s time to draw a frame to the display, the GPU simply scans through the framebuffer and writes each pixel to the screen when the display requests it. The benefit of this solution is that once the CPU writes its data to the GPU’s framebuffer, it doesn’t have to worry at all about the additional processing required to draw the framebuffer to the screen, as that will all be handled in parallel by the GPU.

Because this frame memory must be accessible to both the GPU and the CPU, there is one problem that can arise. If our memory only has one data I/O port, it can only talk with either the CPU or the GPU at any given time, and not both. This means that the framebuffer would be inaccessible to the CPU whenever the GPU needs to access it. While this is a bad enough problem on its own, it is compounded by the fact that the MCPU-1 has no interrupt controller, meaning that it cannot automatically detect when the GPU’s memory is free without explicitly checking. To overcome this, we can use something called dual-port RAM. Dual-port RAM is uniquely suited for VRAM because, as the name suggests, it has two I/O ports instead of one, meaning that two devices can read and write to it at the same time, provided they’re not trying to access the exact same address at the exact same time.

This design decision will also influence the constraints of our GPU’s resolution and color capabilities. Through-hole dual-port RAM only comes in 1 kilobyte chips, meaning that our framebuffer can be no larger than 1024 bytes in total. If we were to have one byte per pixel, this would limit us to a display size of \(\sqrt{1024}\) pixels square, or 32 x 32 pixels. This would only be sufficient for displaying the simplest of images, and would be useless for text. For comparison, the sprite of Mario from the original Super Mario Bros game is 16 x 13 pixels, meaning that we could only fit 4 Marios on our screen.

Instead of dedicating one byte to each pixel, we can split up each byte into multiple pixels. If we want to forgo color altogether, we can make each byte represent 8 pixels, with a 1 being pure white and a 0 being pure black. This will give us a total possible square display size of \(\sqrt{8192}\) pixels, or around 90 x 90. However, to choose our final display size, we need to take a look at the various display protocols in use these days.
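The arithmetic above is easy to check with a few lines of Python. This is only a sketch of the sizing calculation; the function name `max_square_side` is made up for illustration.

```python
import math

VRAM_BYTES = 1024  # capacity of the 1 KB dual-port RAM chip


def max_square_side(bits_per_pixel: int) -> int:
    """Largest whole-pixel side of a square image that fits in VRAM."""
    total_pixels = VRAM_BYTES * 8 // bits_per_pixel
    return math.isqrt(total_pixels)


for bits_per_pixel in (8, 1):
    side = max_square_side(bits_per_pixel)
    print(f"{bits_per_pixel} bit(s) per pixel: {side} x {side}")
```

At one bit per pixel the limit is a total of 8192 pixels, so a non-square layout is just as valid as long as its width times its height stays under that number.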

The three most common display protocols in use today are VGA, HDMI, and DisplayPort. However, HDMI is proprietary and, even worse, DisplayPort is packetized. Therefore, the obvious choice for our purposes is VGA.

Unlike HDMI or DisplayPort, VGA is an analog display format, meaning that the color shown on screen for a given pixel is a function of the R, G, and B voltage levels at that moment in time. Like nearly all television and display formats dating back to the early 1900s, the image on the screen is drawn from left to right, top to bottom, one pixel at a time. In between each horizontal line, the display will expect to receive a signal called the horizontal sync pulse from the display driver. This sync pulse helps correct for small timing errors in the video circuitry, which prevents the image from becoming warped as it is drawn to the screen. Additionally, it serves to let the monitor know which resolution it is being driven at. Likewise, a much longer vertical sync pulse is expected at the end of each frame. The timing diagram for these waveforms is shown below:

The VGA protocol standard defines expected timings for each resolution supported by VGA displays. It is these timings that will affect which resolution our GPU will support. For a reduced resolution to be valid, every pixel timing of the standard mode it is derived from must be evenly divisible by the factor we scale down by. The smallest conventional supported VGA resolution is 640 x 350, which is obviously way too large for our measly 1K framebuffer. To get around this, we need to find a resolution that we can divide by a power of two in order to get a smaller resolution. Luckily for us, the industry standard VGA resolution of 640 x 480 is perfect for this. We can divide the number of pixels per line by 8 in order to achieve a resolution of 80 x 60. This way, our GPU will draw the same pixel 8 times before moving on to the next bit in the framebuffer. The table of display timings for this resolution is shown below:

Note that while we divide the horizontal pixels by 8, there is no easy way to divide the line count in the same way, since 33 (the length of the vertical back porch in lines) is not divisible by 8 or any lower power of 2 other than 1. Hence, we must count each line for the purposes of generating our pulses. However, like with pixels, we will draw the same line 8 times before moving on to the next set of addresses.
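To make the divisibility argument concrete, here is a small sketch that scales the commonly published 640 x 480 @ 60 Hz timings by 8. The numbers are the widely quoted industry values rather than a copy of my own table, and the dictionary keys and the `scale_timing` helper are names invented for this example.

```python
# Commonly published 640 x 480 @ 60 Hz timings (pixels per line, lines per frame).
H_TIMING = {"visible": 640, "front_porch": 16, "sync": 96, "back_porch": 48}
V_TIMING = {"visible": 480, "front_porch": 10, "sync": 2, "back_porch": 33}
SCALE = 8


def scale_timing(timing: dict, factor: int) -> dict:
    """Divide every interval by factor, refusing if any does not divide evenly."""
    for name, value in timing.items():
        if value % factor:
            raise ValueError(f"{name}={value} is not divisible by {factor}")
    return {name: value // factor for name, value in timing.items()}


h_scaled = scale_timing(H_TIMING, SCALE)
print("horizontal / 8:", h_scaled, "total:", sum(h_scaled.values()))

# The vertical intervals do not all divide by 8, so lines are counted one by one.
indivisible = [name for name, value in V_TIMING.items() if value % SCALE]
print("vertical intervals not divisible by 8:", indivisible)
print("lines per frame:", sum(V_TIMING.values()))
```

Every horizontal interval divides cleanly, leaving a 100-count line, while the vertical side keeps its full 525 lines.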

So, we now have a plan for our GPU’s specifications. It will be an 80 x 60, 60 FPS, monochromatic display. This may not seem like much, but it is sufficient to emulate early video games on our CPU, namely CHIP-8 games, which call for a resolution of 64 x 32 monochromatic pixels. We can see a CHIP-8 game being run on a Telmac 1800 below:

Now that we know what the resolution of our GPU will be, we can go about constructing it. I chose to first create the circuitry to generate the sync pulses before moving on to actually drawing an image. This way, I could confirm that the display was properly recognizing the signal. First, I needed to build the horizontal timing circuitry. Before any timing circuit can be built, we need to construct the clock. The VGA timing for 640 x 480 @ 60 FPS calls for a 25.175 MHz clock; however, we must divide this clock signal by 8 before we can use it, since our horizontal resolution will be 1/8th of that in the standard. Luckily, this is very easy to do using a simple digital clock divider circuit. If we use the 25.175 MHz pulse to drive a 74HCT163 binary counter, we can use the counter’s third output bit as the pixel clock, which will give us the desired 3.146875 MHz signal needed to get our 80 x 60 display.
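The divide-by-8 behavior of the third counter bit can be illustrated with a short simulation. This is a behavioral sketch of a free-running binary counter, not a model of the 74HCT163 itself, and the helper names are hypothetical.

```python
MASTER_CLOCK_HZ = 25_175_000  # 25.175 MHz VGA dot clock


def counter_bit_waveform(bit: int, ticks: int) -> list:
    """Value of one output bit of a free-running binary counter at each master tick."""
    return [(count >> bit) & 1 for count in range(ticks)]


def rising_edges(wave: list) -> int:
    """Count the 0 -> 1 transitions in a sampled waveform."""
    return sum(1 for a, b in zip(wave, wave[1:]) if (a, b) == (0, 1))


# Bit 2 is the third output bit (bits are numbered from 0).
wave = counter_bit_waveform(bit=2, ticks=64)
print("rising edges in 64 master ticks:", rising_edges(wave))
print("pixel clock in Hz:", MASTER_CLOCK_HZ / 8)
```

Sixty-four master ticks produce eight rising edges on bit 2, which is the one-in-eight ratio the pixel clock needs.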

The horizontal timing circuit consists of two cascaded 4-bit 74HCT163 counters, which together provide 8 bits, one more than the 7 bits needed to count a horizontal line. These counters are fed by the clock divider, which increments them approximately once every 0.3 microseconds. Left alone, this 8-bit counter pair naturally overflows back to 0 after reaching 255, but we want it to reset after reaching 100 as per the timing table above. In order to accomplish this, we can use another 74 series chip: the 74HCT30. The 74HCT30 is an 8-input NAND gate, meaning that it will always output a one unless all of its inputs are a one, in which case it outputs a zero. We can use this property to create a decoder for each state that the horizontal timer needs to keep track of during its progression. Since each horizontal line will need to switch states four times, we need a total of 4 74HCT30s in the horizontal timer circuit, with their inputs connected to the output of the counters. In order to decode for each state, we can connect each necessary line in the horizontal counter bus to a NOT gate and feed the proper combination of inverted and non-inverted lines into each NAND gate. This way, the gates will always output a 1 except for at the exact pixel count where they become a 0. Since the reset for the 74HCT163 is active low, we can tie this to the output of the endline decoder in order to reset the pixel count as soon as the counters reach 100.
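The decoding scheme can be sketched in Python as follows. The four event counts (80, 82, 94, and 100) are an assumption derived from dividing the standard 640 x 480 horizontal timings by 8, and `make_decoder` is a name invented for this example; the exact counts a real build decodes may differ by one depending on how the counter's clear is timed.

```python
def nand(*inputs: int) -> int:
    """8-input NAND as on a 74HCT30: the output is 0 only when every input is 1."""
    return 0 if all(inputs) else 1


def make_decoder(target: int, width: int = 8):
    """Build an active-low decoder that outputs 0 only when the count equals target."""
    def decode(count: int) -> int:
        lines = []
        for bit in range(width):
            value = (count >> bit) & 1
            # Use the true line where the target bit is 1, the inverted line where it is 0.
            lines.append(value if (target >> bit) & 1 else 1 - value)
        return nand(*lines)
    return decode


# Assumed event counts: standard horizontal timings divided by 8.
H_EVENTS = {"blank_start": 80, "sync_start": 82, "sync_end": 94, "end_line": 100}
decoders = {name: make_decoder(target) for name, target in H_EVENTS.items()}

for name, decode in decoders.items():
    low_counts = [count for count in range(256) if decode(count) == 0]
    print(f"{name}: output is low at counts {low_counts}")
```

Each decoder stays high for all 256 possible counter values except its own target, which is exactly the behavior the latches and the reset line rely on.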

Finally, we need a way to switch states on and off using the triggers from the decoders. An easy way to do this is with an SR (set-reset) latch. By cross-coupling two 2-input NAND gates, we can toggle a state bit on and off in order to send out control pulses from our horizontal circuit. The two signals we need are our horizontal blanking signal and our horizontal sync pulse. VGA sync pulses are active low, meaning that they should be a 0 when the GPU is sending out the pulse and 1 otherwise. Likewise, for reasons we’ll get to later, our horizontal blanking signal should also be active low. Horizontal blanking is used to prevent the GPU from drawing to the screen after the display’s scanline has left the visible area of the screen. This is important since drawing “off-screen” is undefined behavior for VGA displays, and could produce undesirable artifacts in our picture. So, we’ll hook up the latches for our pulse generation to the decoders from the horizontal timing circuit to get the following:
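As a rough illustration, the latch and the sync pulse it produces can be simulated like this. The class name, the choice of which decoder drives set versus reset, and the counts 82 and 94 (standard sync timings divided by 8) are all assumptions made for the sketch rather than a description of the exact wiring.

```python
def nand(a: int, b: int) -> int:
    return 0 if (a and b) else 1


class NandSRLatch:
    """Cross-coupled NAND latch with active-low set and reset inputs."""

    def __init__(self, q: int = 1):
        self.q = q
        self.q_bar = 1 - q

    def update(self, set_n: int, reset_n: int) -> int:
        # Re-evaluate both gates until the cross-coupled outputs settle.
        for _ in range(4):
            q = nand(set_n, self.q_bar)
            q_bar = nand(reset_n, q)
            if (q, q_bar) == (self.q, self.q_bar):
                break
            self.q, self.q_bar = q, q_bar
        return self.q


SYNC_START, SYNC_END, LINE_LENGTH = 82, 94, 100  # assumed counts


def hsync_for_one_line() -> list:
    """Drive the latch from two active-low decoder outputs across one line."""
    latch = NandSRLatch(q=1)  # sync idles high
    levels = []
    for count in range(LINE_LENGTH):
        reset_n = 0 if count == SYNC_START else 1  # pulls the sync line low
        set_n = 0 if count == SYNC_END else 1      # releases it back high
        levels.append(latch.update(set_n, reset_n))
    return levels


levels = hsync_for_one_line()
low_counts = [count for count, level in enumerate(levels) if level == 0]
print("sync is low from count", low_counts[0], "to", low_counts[-1])
```

Because the decoders are already active low, they can drive the latch inputs directly with no extra inverters, which is one reason the NAND form of the latch is convenient here.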

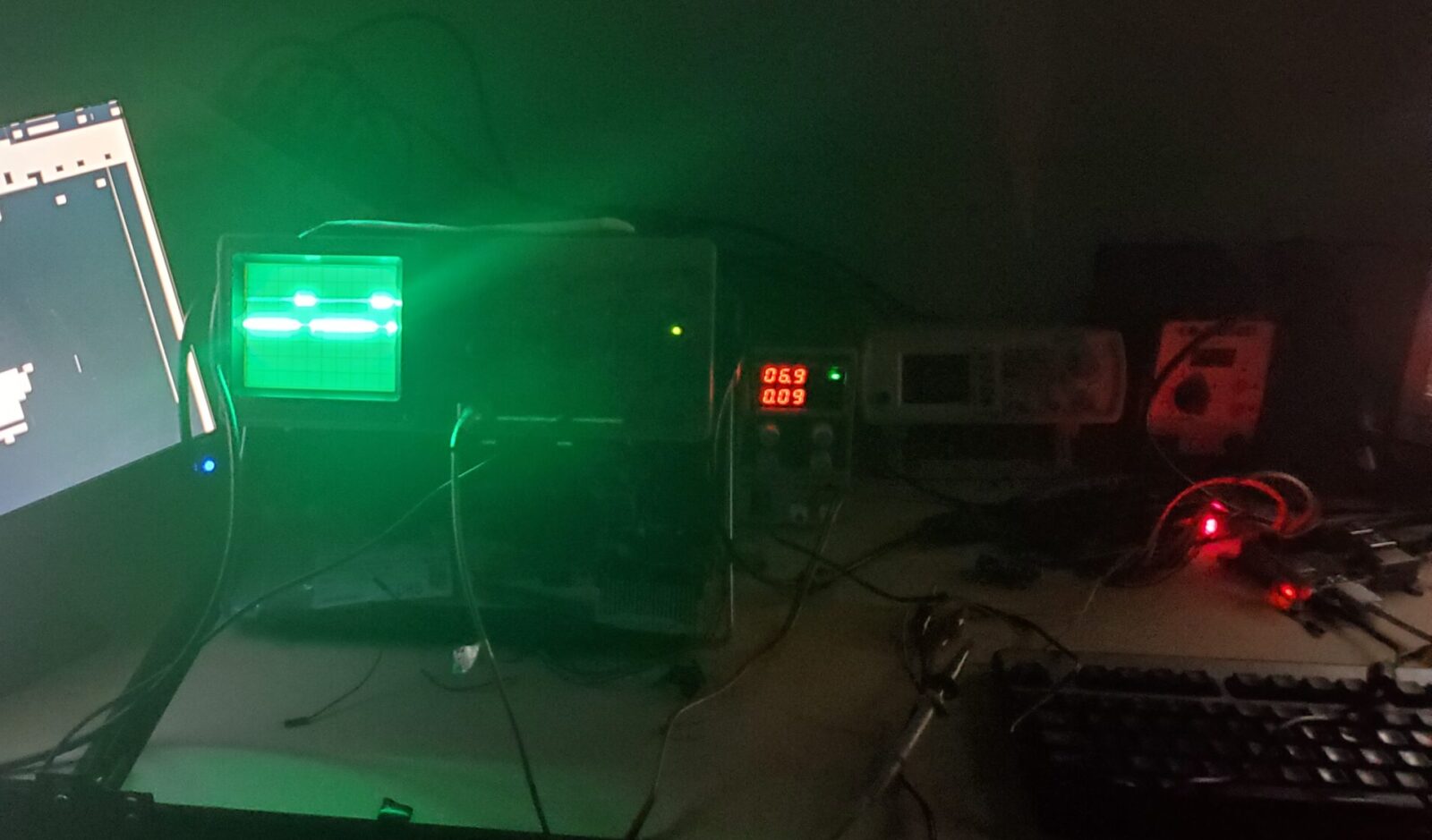

After laying out the plans for the horizontal timing circuit, I started to build it on a breadboard. I first connected LEDs to the outputs of all the decoders and drove the circuit with a signal generator to make sure all the right states were being hit at the right time. After that was confirmed, I attached the SR latches and looked at the output waveforms on my oscilloscope, which both appeared to be behaving properly:

Here we can see that the horizontal circuit correctly enters the blanking interval before sending out the sync pulse. Note that the sync pulse is inverted from how it normally should be in this image; this will be fixed later on.

After completing the horizontal timing circuit, it was time to move on to the vertical timing. The vertical timing circuit is very similar to the horizontal circuit in terms of its basic operation, although some key changes need to be made. For one, the vertical circuit doesn’t keep track of how many pixels have been drawn to the screen; it counts lines instead. Therefore, it is only incremented after it receives a pulse from the endline decoder. Additionally, because we can’t divide the line count by 8 like we can with the pixel count, we will need a total of 10 bits to keep track of the line count, which necessitates the use of a third counter.

We may also notice that while our NAND gates can only take an input of 8 bits, we have 10 bits that we need to decode in this circuit. Luckily for us, we can get around this by exploiting some of the patterns in the numbers we need to trigger our states at. For one, the first three binary integers we need to decode start with four consecutive 1s followed by a 0. We can reduce these four bits into a single bit using an AND gate cascade, meaning that our decoders will only need to consider 6 bits instead of 10 (we ignore the MSB because it will never reach a state where it will be 1 and the former conditions are also true). Finally, the endframe decoder gets triggered when the line count has a leading bit of 1 followed by 5 zeros. Because the counter gets reset to zero at this point, we don’t need to account for conditions where any of those five successive bit positions are anything but 0. This means we can simply evaluate the MSB and the 4 least significant bits to determine if we are at the end of a frame. The final circuit is shown below:
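The bit patterns are easier to see written out. The sketch below assumes the four vertical events fall on lines 480, 490, 492, and 525 (the standard 640 x 480 vertical timings); `reduced_match` is a hypothetical helper that models the reduced decoders rather than the exact gate wiring.

```python
# Assumed vertical event lines from the standard 640 x 480 timings.
V_EVENTS = {"blank_start": 480, "sync_start": 490, "sync_end": 492, "end_frame": 525}

for name, line in V_EVENTS.items():
    print(f"{name:12s} {line:4d}  {line:010b}")


def reduced_match(line: int, target: int) -> bool:
    """Decode target while looking at fewer than all 10 counter bits."""
    low_nibble_ok = (line & 0xF) == (target & 0xF)
    if (target >> 9) & 1:
        # End of frame: only the MSB and the 4 least significant bits are checked.
        return ((line >> 9) & 1) == 1 and low_nibble_ok
    # Other events: bits 8-5 collapse into one AND term, bit 4 must be 0, MSB ignored.
    upper_all_ones = ((line >> 5) & 0xF) == 0xF
    return upper_all_ones and ((line >> 4) & 1) == 0 and low_nibble_ok


# The line counter never passes 525, so only that range needs to be unambiguous.
for name, target in V_EVENTS.items():
    hits = [line for line in range(526) if reduced_match(line, target)]
    print(f"{name}: reduced decoder fires at {hits}")
```

Running this over every reachable line count shows each reduced decoder firing at exactly one line, so skipping the extra bits does not introduce false triggers.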

Now that we have a means of generating both our vertical and horizontal sync pulses, a monitor should be able to detect the signal from the GPU if we hook it up to a proper VGA connector. Much like the horizontal timing circuit, I constructed the vertical timer on some additional breadboards and linked the two together. After that, I attached a VGA jack to the circuit and hooked it up to a monitor to test.

As I powered on the circuit, the monitor said it detected a 640 x 480 VGA signal at 59 FPS, which means that my sync pulses were being correctly generated. Next, in order to test that I could draw an image to the screen, I hooked up the R, G, and B signals to different timer outputs on the horizontal circuit, which generated the following pattern on the screen:

Now that I had confirmation that the timing circuits were working properly, the next step was to hook up the VRAM for the framebuffer and begin drawing real images to the screen. Addressing each pixel works by taking bits 9-3 of the line count as the high order bits for the framebuffer address and then using bits 6-3 of the pixel count as the low order address bits. The final three bits of the pixel count are used to control a multiplexer that sends each bit of the current byte in the framebuffer to the monitor one by one. When the mux reaches the end of the byte, the memory address is automatically incremented to the next byte. This of course means that only 600 of the 1024 bytes in our VRAM are actually used, but this is fine since the CPU can use the unused addresses for general storage if need be, thanks to the RAM’s dual-ported nature. The full VRAM circuit is shown below:
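Here is a minimal sketch of that address mapping. It assumes the pixel count referred to is the 7-bit, divided-by-8 horizontal count, and the function name `vram_address` is invented for the example. The order in which the mux steps through the bits of a byte is not modeled, only the bit index.

```python
def vram_address(line_count: int, pixel_count: int):
    """Map the timing counters to (byte address, bit index within that byte)."""
    row = (line_count >> 3) & 0x7F      # bits 9-3 of the line count
    column = (pixel_count >> 3) & 0xF   # bits 6-3 of the pixel count
    bit = pixel_count & 0x7             # low three bits drive the mux select
    return (row << 4) | column, bit


# Walk the visible area: 480 lines, 80 low-resolution pixels per line.
used = {vram_address(line, pixel)[0] for line in range(480) for pixel in range(80)}
print("bytes used:", len(used))
print("highest address used:", max(used))
```

Each row starts on a 16-byte boundary but only uses 10 bytes, so the unused bytes are interleaved between rows rather than sitting in one block at the end of the chip.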

Since the GPU is monochromatic, all three RGB signals are tied to the same mux output. Since a VGA input terminates each color line with 75Ω to ground and expects a 0.7V maximum, we must connect each color channel through a 470Ω series resistor, which divides a 5V logic high down to roughly 0.69V, in order to properly drive the display. An optional potentiometer is also put in series with the output to adjust screen brightness if desired. Now that we have the full circuit laid out, I assembled the rest of the GPU on breadboards to arrive at this final product (the bottom left corner is censored as it contains a spoiler for later in the article):
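The resistor value follows from a simple voltage divider calculation. The sketch below assumes the logic output swings to a full 5V when high, which is an idealization; the function name is made up for the example.

```python
def divider_output(v_in: float, r_series: float, r_load: float) -> float:
    """Voltage across r_load when v_in drives it through r_series."""
    return v_in * r_load / (r_series + r_load)


V_LOGIC_HIGH = 5.0   # assumed ideal output level of the 5V logic
R_SERIES = 470.0     # series resistor on each color channel
R_MONITOR = 75.0     # termination inside the monitor

v_pixel = divider_output(V_LOGIC_HIGH, R_SERIES, R_MONITOR)
print(f"voltage at the monitor input: {v_pixel:.3f} V")
```

The result lands just under the 0.7V ceiling, which is why 470Ω is a convenient standard value for this job.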

Now that we have our framebuffer interfaced with the GPU, we should see a blank screen when we power on the GPU, and should then be able to write bits into the framebuffer and onto the screen. However, when we power on the GPU, we are greeted with the following:

The reason for this random distribution of pixels is fairly simple. The RAM chip we are using does not initialize its values to 0 on startup, meaning that the value of each bit is essentially random, giving us the static-looking pattern we see on the screen. Therefore, we must manually clear each address in the framebuffer before we display an image.

The easiest way to do this is to use a microcontroller like an Arduino to interface with the GPU. To interface with an Arduino, I hooked all the necessary address and data lines to the GPU and programmed the Arduino to write data into the VRAM. After programming it to display a simple scrolling test image consisting of a “hello world” message and a space invaders alien, this was the result:

Now that I confirmed the breadboard version of my GPU was working, it was time to build a more permanent version. Since I had used EAGLE to lay out the schematic, the next logical step was to simply create a board from it. I decided to lay out the PCB for the GPU as a two layer board with a ground plane on one side and a +5V plane on the other. This gave me an easy way to power all the 5V logic chips in the circuit from a single source. The VGA connector and I/O pins were laid out in a similar fashion to how modern GPUs look, although this GPU obviously couldn’t be plugged in to a normal modern PC. For the horizontal and vertical buses, I laid them out on the same side of the board and then used via connections to the opposite side to distribute the proper signals to each chip that needed them. This greatly simplified the design and also gave the board a nice aesthetically pleasing look. Finally, the VRAM and output mux were placed close to the output connector and routed to their respective address lines. Below is the final EAGLE board view of the PCB:

When making the PCB, I decided to christen it the Pong GPU, since its resolution and color capabilities translate well to the classic game of Pong. After I sent the board files off to the manufacturer, the boards arrived about a week later. Once I got the boards, I transferred all the components from the breadboards into their sockets in the PCB and tested the circuit. It ran even better than it did before, because there was less parasitic noise on the properly routed and grounded PCB than on the breadboards. Below is the final assembled PCB hooked up to a driver device:

Luckily, there were no routing errors on the first try, although I did have a few small issues. I ended up not having a trimpot that fit the footprint for the dimmer, so I bypassed it with a jumper. Additionally, the silkscreen for the LED ended up being backwards when I designed the part, which I later fixed upon detecting the issue on this board. Finally, the VGA jack footprint I used had a different pitch than the connector that I was using, meaning I had to order a different brand’s jack in order to make it fit the board. After these small corrections, though, the board worked flawlessly, and I’m quite happy with how it turned out.

Now that I had assembled and tested the GPU, the final step was to actually use it for something. Unfortunately, the CPU I designed it for doesn’t exist in physical form yet, so I had to find another application for it. I ultimately decided to try hooking it up to a Raspberry Pi. At first, I thought this would be a semi-complicated undertaking, but it actually ended up being quite simple. The OpenCV library for Python contains many useful tools for resizing and posterizing screen content, as well as converting frames into arrays. Using OpenCV, I made a script that would capture a given region of the screen or webcam input and convert it into an 80 x 60 posterized black and white image. That image would then be converted into the Pong GPU’s data format and pushed to the framebuffer. I first tested this virtually on my PC before downloading it onto a Raspberry Pi and trying it for real. You can see the final results of this below:
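The last step of that pipeline, converting an 80 x 60 black and white image into framebuffer bytes, can be sketched in plain Python. This is not the actual script: it leaves out the OpenCV capture and resizing entirely, and it assumes a row-times-16-plus-column byte layout with the leftmost pixel in the most significant bit. The function name `pack_frame` is invented for the example.

```python
WIDTH, HEIGHT = 80, 60
VRAM_SIZE = 1024


def pack_frame(pixels) -> bytearray:
    """Pack HEIGHT rows of WIDTH truthy/falsy values into a VRAM image.

    Assumed layout: address = row * 16 + column byte, leftmost pixel in the MSB.
    """
    vram = bytearray(VRAM_SIZE)  # starts zeroed, which also clears the screen
    for y, row in enumerate(pixels):
        for x, value in enumerate(row):
            if value:
                address = (y << 4) | (x >> 3)
                vram[address] |= 0x80 >> (x & 7)
    return vram


# Example frame: a one-pixel white border around a black screen.
frame = [
    [1 if x in (0, WIDTH - 1) or y in (0, HEIGHT - 1) else 0 for x in range(WIDTH)]
    for y in range(HEIGHT)
]
vram = pack_frame(frame)
print("non-zero bytes in the packed frame:", sum(1 for byte in vram if byte))
```

In a real script, the nested list would come from a resized and thresholded capture, and the resulting bytes would be written out over whatever address and data lines connect the host to the VRAM.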